If you’ve ever sat in on one of our stand-ups, you’ve probably heard acronyms flying around before your coffee’s even cooled. One of the most common is OCR, Optical Character Recognition, and it’s at the core of how we process bank statements at Finch. But what does it do, and more importantly, how will it evolve alongside emerging AI-driven approaches?

Farhaad Sallie, our Intermediate Software Engineer, has been digging into this problem for the past few months. He recently walked the team through how our approach is shifting, from rigid templates to context-aware AI, and why it’s a big deal for scaling document processing without burning out on maintenance.

Innovating with AI is about finding the right balance between cutting-edge technology and unwavering reliability. We built our solution on the principle that speed and scalability are meaningless without a foundation of trust and accuracy.

– Farhaad Sallie, Intermediate Software Engineer

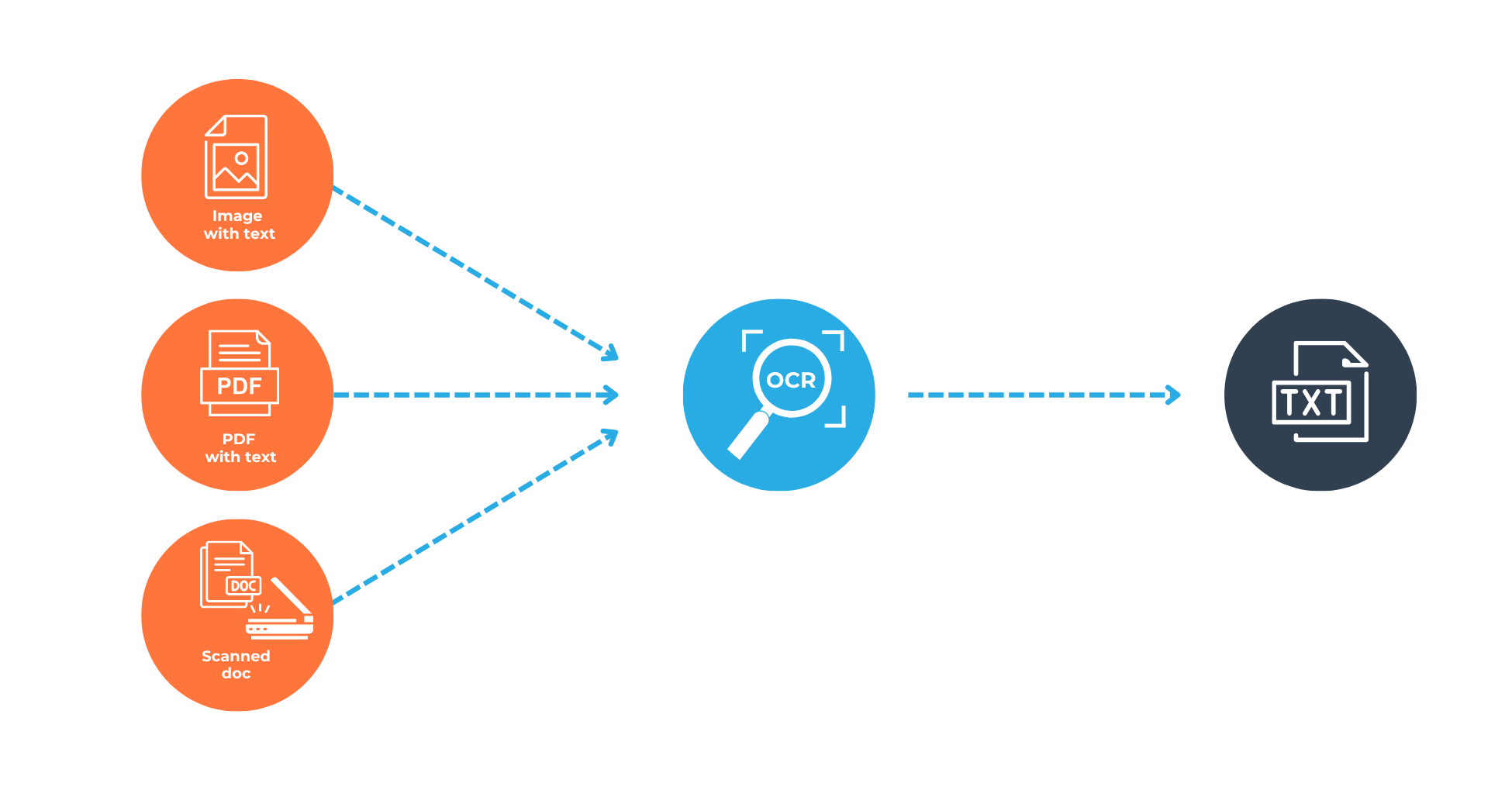

OCR in a nutshell

Optical Character Recognition is the process of turning images of text, whether from scans, PDFs, or photographs, into machine-readable data. Think of it as the first step in converting a document into something searchable, editable, and ultimately usable. OCR has made it possible for many companies to move from paper-based record-keeping to digital document archives.

For us, OCR underpins our ability to extract information from scanned bank statements. But raw OCR alone only gives us the text, not its meaning, context, or structure. That’s where template-based extraction came in.

Templates: the good, the bad and the brittle

To turn that raw text into usable data, we created templates, basically sets of rules that tell our system where to look for key info like account numbers, balances, and transaction dates. It works like this:

- The system identifies the bank (using logos, headers, keywords).

- Based on that, it assigns the correct template.

- That template guides the extraction, pulling out fields, matching rows, structuring data.
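To make those three steps concrete, here is a minimal sketch in Python. It is not our production code: the bank names, field labels, and regular expressions are all made up for illustration, and a real template would cover far more fields.

```python
import re
from dataclasses import dataclass


@dataclass
class Template:
    """Hypothetical template: how to spot a bank and where its fields live."""
    bank_keyword: str
    account_pattern: re.Pattern
    balance_pattern: re.Pattern


# Illustrative templates for two invented banks.
TEMPLATES = [
    Template(
        bank_keyword="Example Bank",
        account_pattern=re.compile(r"Account Number:\s*(\d+)"),
        balance_pattern=re.compile(r"Closing Balance:\s*([\d,]+\.\d{2})"),
    ),
    Template(
        bank_keyword="Sample Credit Union",
        account_pattern=re.compile(r"Acc No\.\s*(\d+)"),
        balance_pattern=re.compile(r"Balance c/f\s*([\d,]+\.\d{2})"),
    ),
]


def pick_template(ocr_text):
    """Steps 1 and 2: identify the bank by keyword and return its template."""
    for template in TEMPLATES:
        if template.bank_keyword.lower() in ocr_text.lower():
            return template
    return None


def extract(ocr_text):
    """Step 3: let the template pull the fields out of the raw OCR text."""
    template = pick_template(ocr_text)
    if template is None:
        return None  # unknown bank: no template to apply
    account = template.account_pattern.search(ocr_text)
    balance = template.balance_pattern.search(ocr_text)
    return {
        "bank": template.bank_keyword,
        "account_number": account.group(1) if account else None,
        "closing_balance": balance.group(1) if balance else None,
    }
```

If the invented "Example Bank" relabels "Account Number:" as "Acct No:", the account pattern stops matching and `account_number` comes back as `None` until someone updates the template by hand.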

It’s a solid approach… until something changes.

The challenges:

- Design changes constantly. Banks tweak fonts, layouts, or logos, and boom, the template breaks.

- Tedious upkeep. Every format change means manual updates.

- Hard to maintain. More banks = more templates = more overhead.

- Wasted dev time. Engineers end up chasing formatting bugs instead of solving real problems.

So after some careful brainstorming around the engineering table, we asked: what if we could ditch templates altogether?

The Migration: AI-Powered Document Reading

We’ve started exploring smarter ways of understanding documents, ones that don’t rely on fixed rules, but instead “read” and interpret what’s in front of them. That’s where multimodal large language models (LLMs) come in.

Don’t tell the system where to look, let it figure out what it’s looking at.

LLMs process both the visual layout (tables, headers, fonts) and the text itself, making sense of what’s on the page and how everything fits together.

Our new approach: smarter, not harder

Here’s how we’ve started rethinking document extraction using AI:

AI Inference

The document is sent to our AI engine, which:

- Picks up layout structures like tables and white space

- Understands what each field means (a date vs. a description vs. a total)

- Links related elements (e.g. matching a transaction amount to the right date)

Structured Output

The result:

- Clean, structured JSON ready for downstream systems

- Auto-validation of fields and logic

- Flags for review if confidence is low
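As a rough illustration of that last point, the sketch below flags an extraction for human review when any field falls under a confidence threshold. The JSON shape, the field names, and the 0.9 cut-off are assumptions made for this example, not our actual schema or settings.

```python
import json

# Assumed cut-off for illustration; a real threshold would be tuned on data.
REVIEW_THRESHOLD = 0.9


def flag_for_review(extraction, threshold=REVIEW_THRESHOLD):
    """Return a copy of the extraction with review flags added."""
    low_confidence = [
        name
        for name, field in extraction["fields"].items()
        if field["confidence"] < threshold
    ]
    return {
        **extraction,
        "needs_review": bool(low_confidence),
        "low_confidence_fields": low_confidence,
    }


# Hypothetical model output: each field carries a value and a confidence score.
example = {
    "fields": {
        "account_number": {"value": "12345678", "confidence": 0.99},
        "closing_balance": {"value": "1250.00", "confidence": 0.72},
    }
}

print(json.dumps(flag_for_review(example), indent=2))
```

Here only `closing_balance` falls below the threshold, so the statement is marked for review and a person checks that one field rather than the whole document.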

Why this matters (and why we’re excited)

This change unlocks some major wins:

- Adapts on the fly: LLMs don’t need format-specific templates, they generalise across layouts, even unseen ones.

- Easier to maintain: Onboard new banks without the overhead of template design.

- Saves dev time: Less time spent on maintenance means more time for innovation.

We’ve prepared for AI limitations

AI isn’t magic. LLMs can “hallucinate”, e.g. make up totals that don’t exist, and in financial contexts like ours, accuracy is non-negotiable.

We’ve implemented robust post-processing validation, including:

- Cross-validation: Do the transactions sum correctly? Does the opening balance plus the transactions equal the closing balance?

- Field consistency: Are dates within the statement period? Do account numbers match throughout?

- Anomaly detection: Flag duplicates, outliers, and formatting mismatches.
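A few of these checks can be sketched in a handful of lines. This is a simplified illustration, not our validation layer: the statement structure and field names are assumptions, and amounts use `Decimal` so the balance arithmetic is exact.

```python
from datetime import date
from decimal import Decimal


def validate_statement(stmt):
    """Return a list of human-readable problems found in an extracted statement."""
    errors = []

    # Cross-validation: opening balance plus transactions must equal closing balance.
    total = sum((t["amount"] for t in stmt["transactions"]), Decimal("0"))
    if stmt["opening_balance"] + total != stmt["closing_balance"]:
        errors.append("balances do not reconcile")

    # Field consistency: every transaction date must fall inside the statement period.
    for t in stmt["transactions"]:
        if not (stmt["period_start"] <= t["date"] <= stmt["period_end"]):
            errors.append(f"transaction dated {t['date']} is outside the statement period")

    # Anomaly detection (simplified): flag exact duplicate transactions.
    seen = set()
    for t in stmt["transactions"]:
        key = (t["date"], t["description"], t["amount"])
        if key in seen:
            errors.append(f"possible duplicate: {t['description']} on {t['date']}")
        seen.add(key)

    return errors


# Hypothetical extracted statement used to exercise the checks.
statement = {
    "period_start": date(2024, 1, 1),
    "period_end": date(2024, 1, 31),
    "opening_balance": Decimal("1000.00"),
    "closing_balance": Decimal("1250.00"),
    "transactions": [
        {"date": date(2024, 1, 5), "description": "Groceries", "amount": Decimal("-200.00")},
        {"date": date(2024, 1, 25), "description": "Salary", "amount": Decimal("450.00")},
    ],
}

print(validate_statement(statement))
```

A hallucinated closing balance would fail the first check, because the transactions would no longer add up to it.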

These safeguards help us build trust in AI-driven extraction, without sacrificing speed or scale.

So, is the industry ready?

Yes, with small caveats: it’s all about having the right controls in place. LLM-based extraction isn’t a plug-and-play solution, but with careful validation and domain logic layered in, it’s production-ready.

At Finch, we’ve found the balance between innovation and reliability, combining next-gen AI with robust validation and business logic.

TL;DR (for the old tops – this means too long didn’t read – so here’s the gist of what we’re trying to say).

- Traditional OCR + Templates: Fast and inexpensive, and work well, but do not handle format changes and have to be maintained manually.

- LLMs + Visual Understanding: Flexible and require little manual intervention, but are slow and costly, and require validation.

If you’re in the business of processing large volumes of scanned or structured documents, especially in sensitive industries like finance, the future is clear: AI is not just capable, it’s necessary.

P.S. The marketing team made me include this, just kidding, our solutions are pretty epic, so it’s a no-brainer to chat to us about them 👇

If you’re interested in exploring how these advancements could optimise your document-heavy workflows, or if you’d like to discuss the unique challenges and opportunities of applying LLMs to real-world data extraction, we invite you to connect with us.